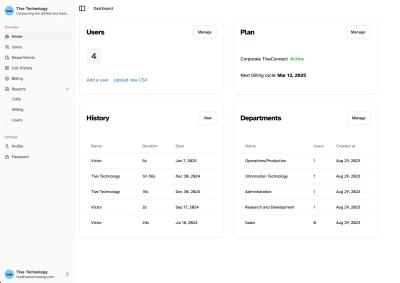

Tive Admin

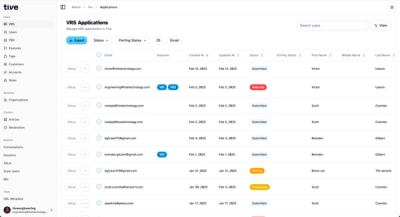

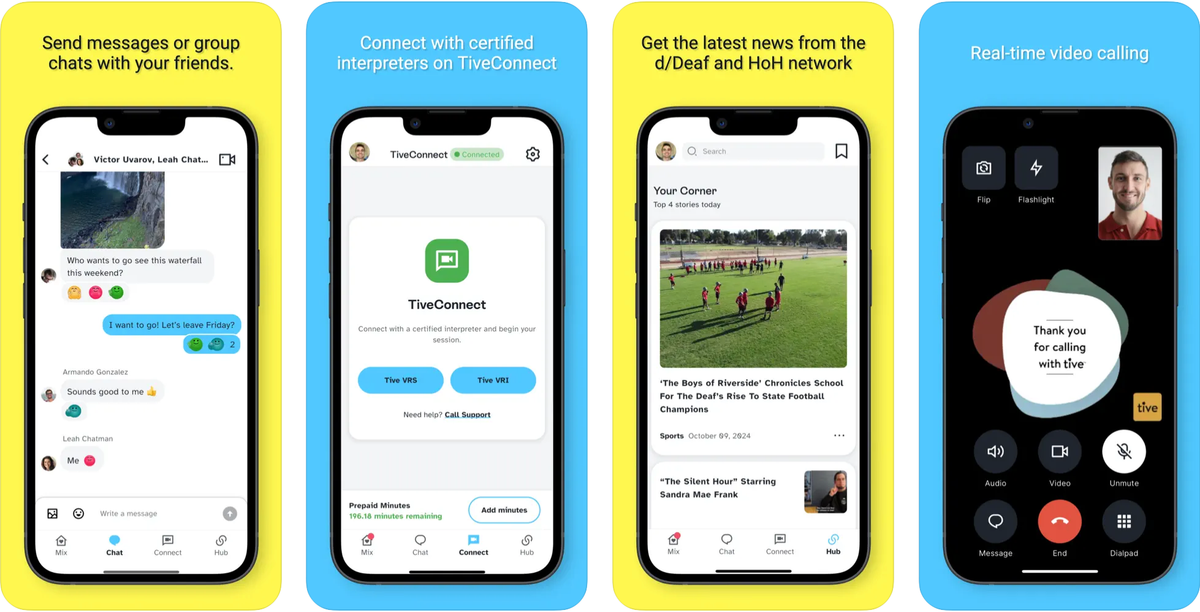

As the first engineer on the project, I developed Tive, a platform originally conceived to connect members of the d/Deaf community with ASL interpreters on demand. The concept was similar to Uber's model, but instead of connecting users with drivers, we aimed to provide immediate access to ASL interpretation services.
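To make the on-demand model concrete, here is a minimal sketch of how a request could be paired with the first available interpreter. It is an illustration only, not Tive's actual code: the `Dispatcher` class, its method names, and the `roomName` format are all hypothetical, and a real system would also need persistence, timeouts, and notifications.

```typescript
// Hypothetical sketch: none of these names come from Tive's codebase.
type Interpreter = { id: string; available: boolean };
type Session = { requesterId: string; interpreterId: string; roomName: string };

class Dispatcher {
  private waiting: string[] = []; // requester ids, oldest first
  private interpreters: Interpreter[] = [];

  // Register an interpreter (or update one) and, if they are now
  // available, try to pair them with the longest-waiting requester.
  setAvailability(id: string, available: boolean): Session | null {
    let found: Interpreter | null = null;
    for (let i = 0; i < this.interpreters.length; i++) {
      if (this.interpreters[i].id === id) found = this.interpreters[i];
    }
    if (found === null) {
      this.interpreters.push({ id: id, available: available });
    } else {
      found.available = available;
    }
    return available ? this.tryMatch() : null;
  }

  // Queue a requester and try to pair them right away.
  requestInterpreter(requesterId: string): Session | null {
    this.waiting.push(requesterId);
    return this.tryMatch();
  }

  // Pair the oldest waiting requester with the first available interpreter.
  private tryMatch(): Session | null {
    if (this.waiting.length === 0) return null;
    for (let i = 0; i < this.interpreters.length; i++) {
      const interpreter = this.interpreters[i];
      if (interpreter.available) {
        const requesterId = this.waiting.shift() as string;
        interpreter.available = false; // busy until marked available again
        return {
          requesterId: requesterId,
          interpreterId: interpreter.id,
          roomName: `session-${requesterId}-${interpreter.id}`,
        };
      }
    }
    return null;
  }
}

// Usage: a requester waits until an interpreter comes online.
const dispatcher = new Dispatcher();
const pending = dispatcher.requestInterpreter("user-1"); // null, nobody online yet
const matched = dispatcher.setAvailability("interp-1", true); // pairs user-1 with interp-1
console.log(pending, matched);
```

The returned `roomName` stands in for whatever identifier the video layer would use to place both parties in the same call.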

The first technical challenge was identifying and implementing suitable video chat technology. After evaluating several options, I selected Agora, a real-time voice and video platform, for the proof of concept. It was straightforward to implement, and connecting to a room required very little code.

The initial demos were successful, but as we moved toward production we ran into the limits of Agora's customization capabilities. Specifically, we needed:

These requirements led us to migrate to Twilio's Video API, which provided:

Integrating ASL interpreter services proved more complex than anticipated. We developed a preliminary system for interpreter support, but ultimately transitioned to a custom SIP over WebRTC video solution. This migration introduced several technical challenges:

Technical Requirements:

Implementation Challenges:

The experience provided valuable insights into both the technical and business aspects of developing specialized communication platforms for specific communities.